For my final, I am considering two different ideas, which I explain in more detail below:

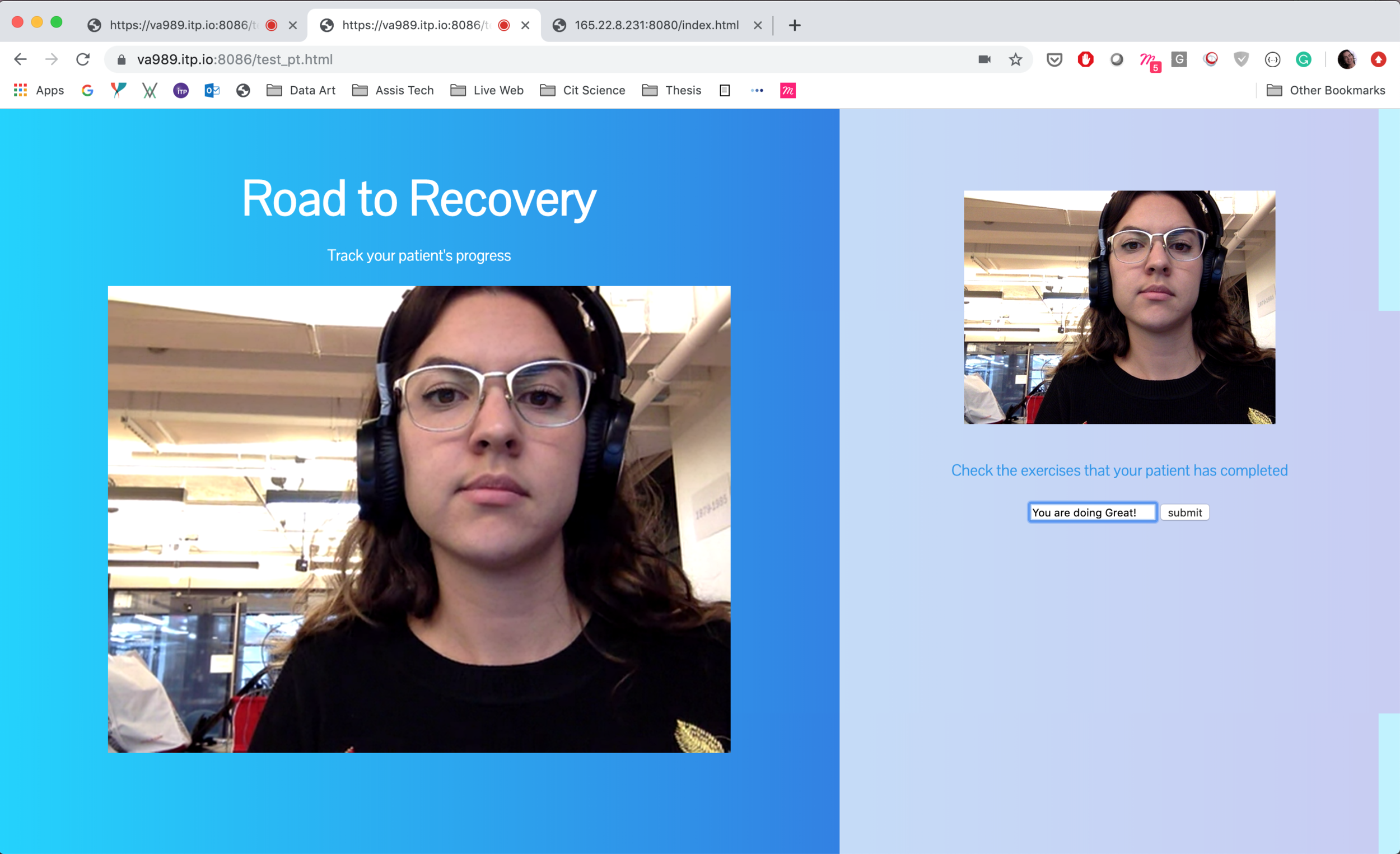

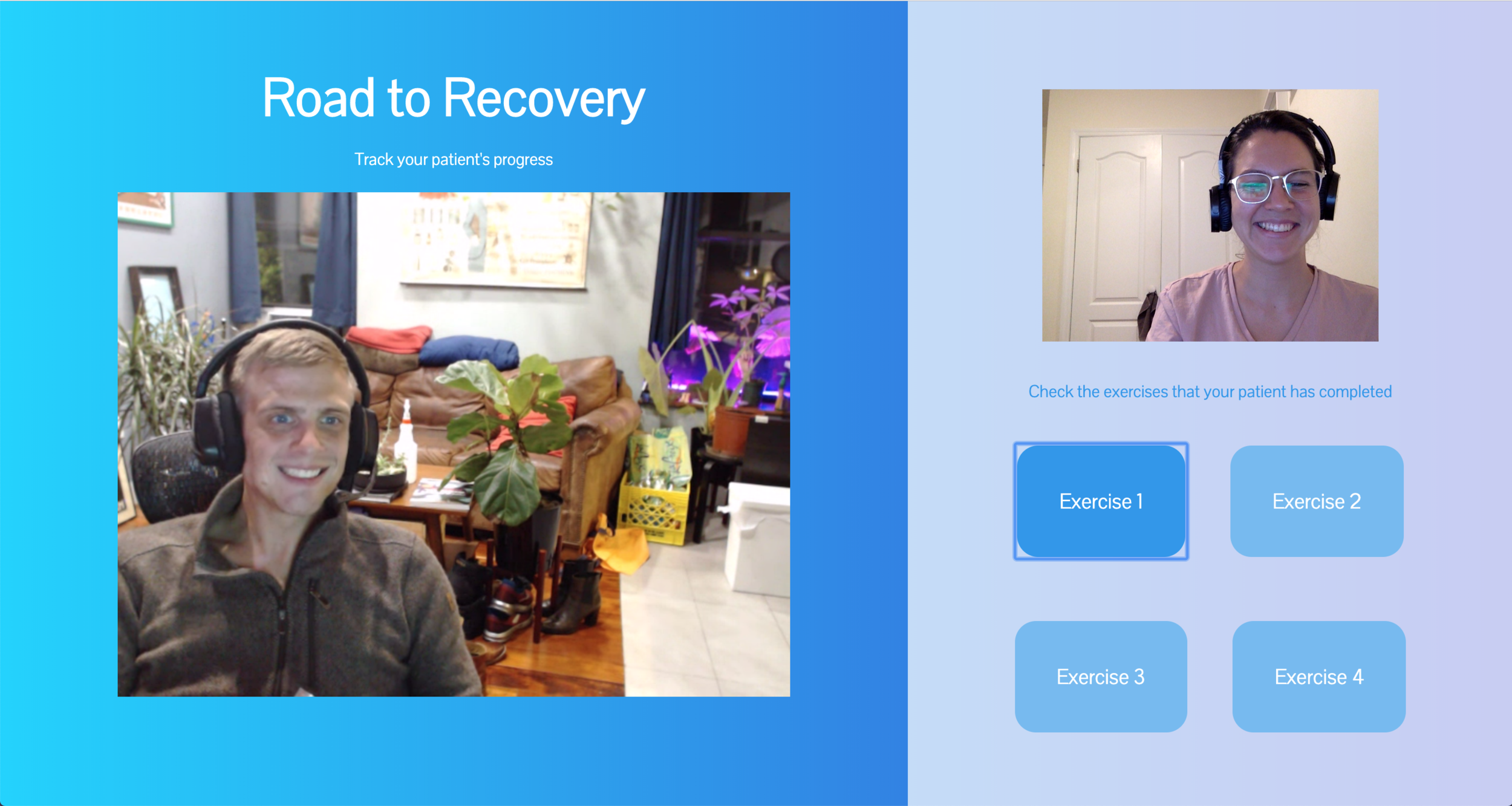

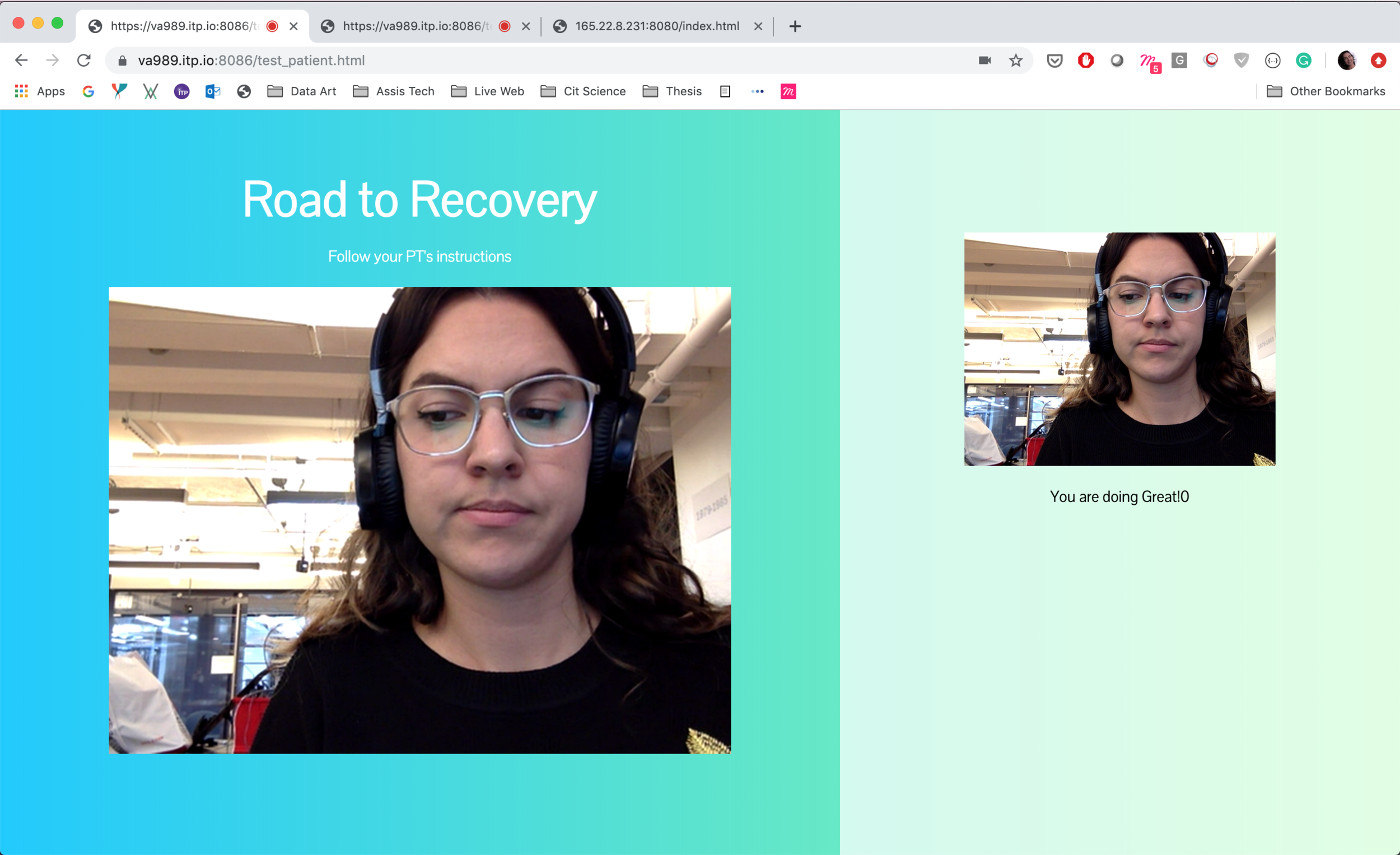

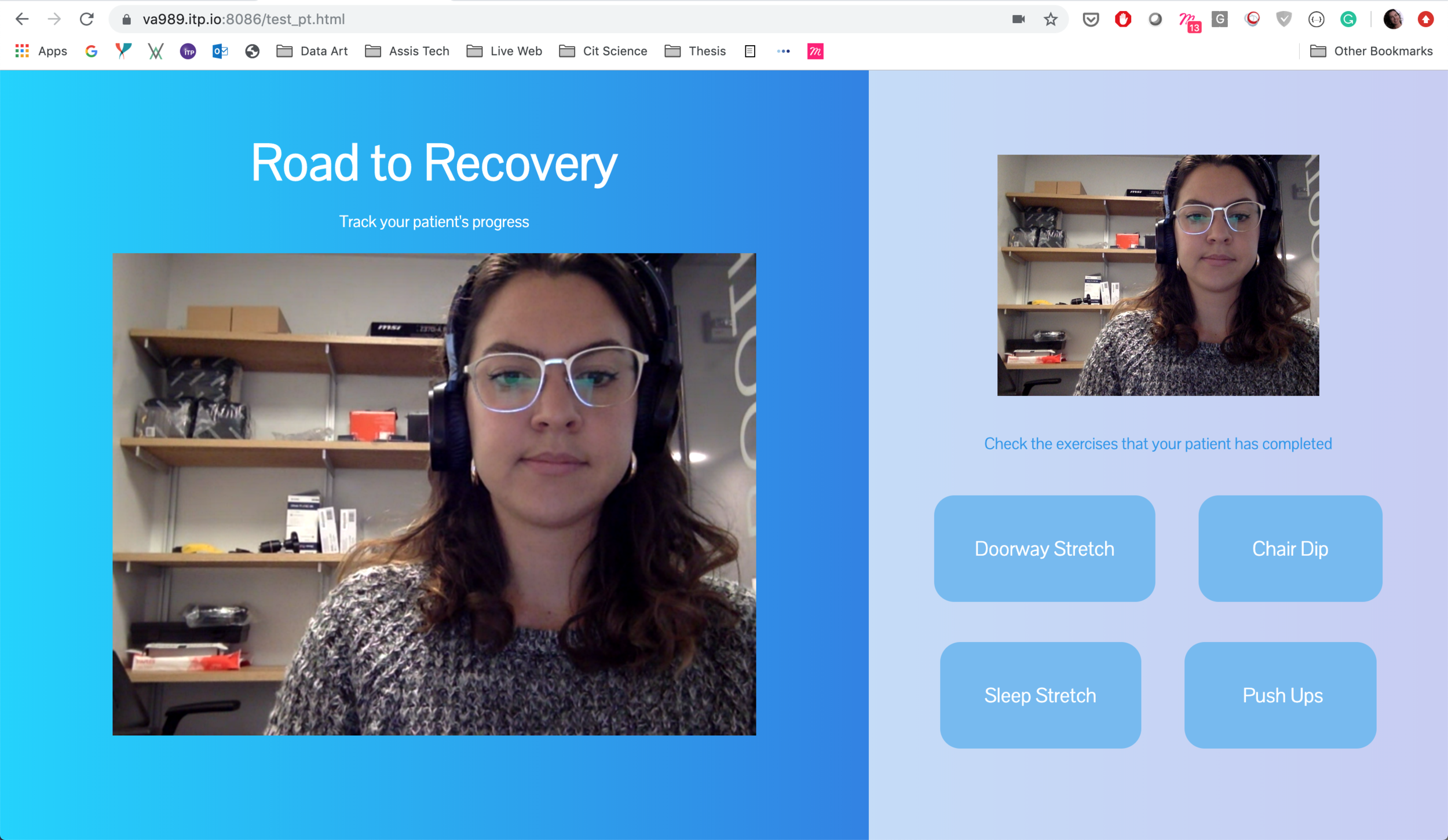

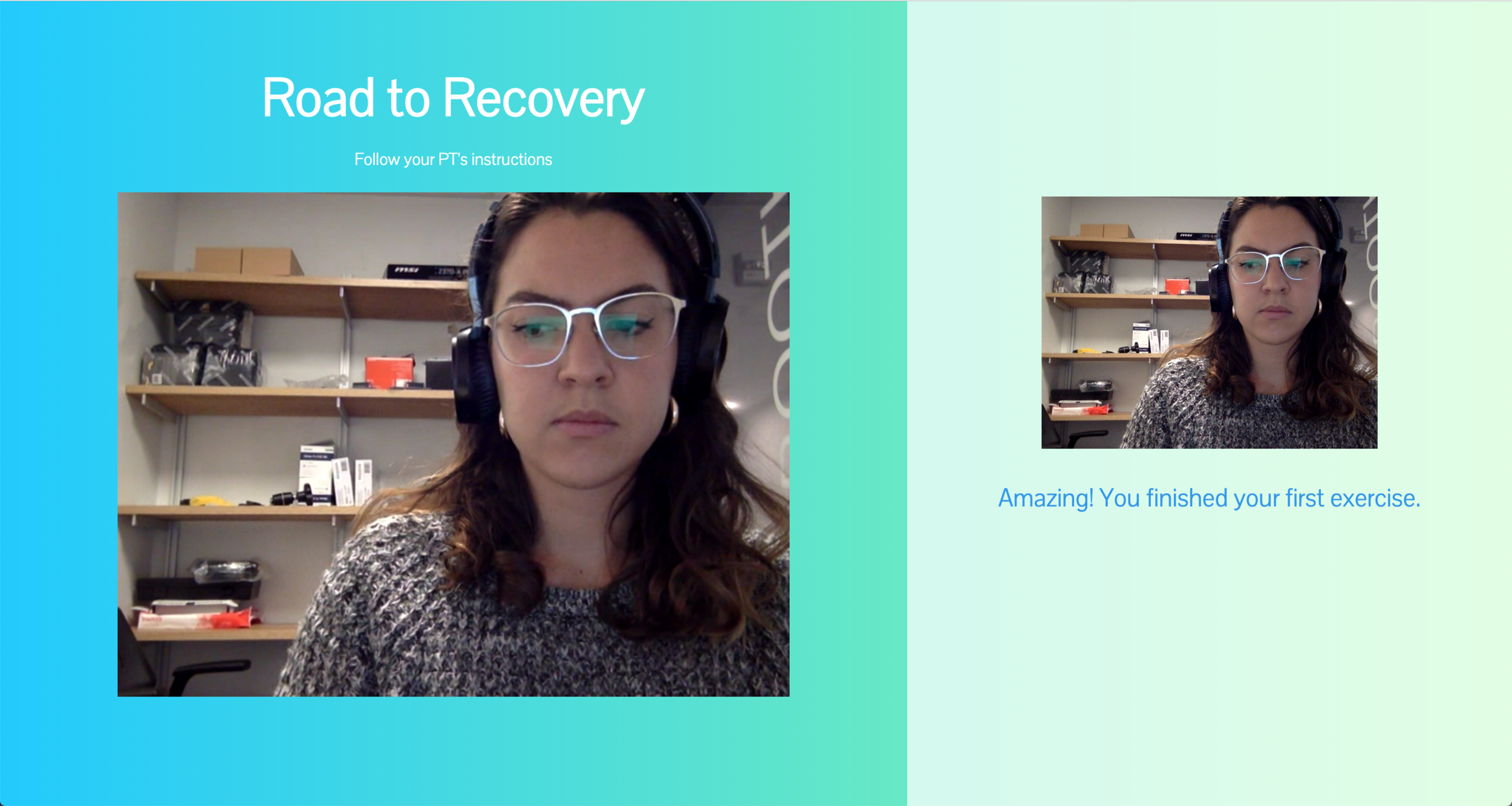

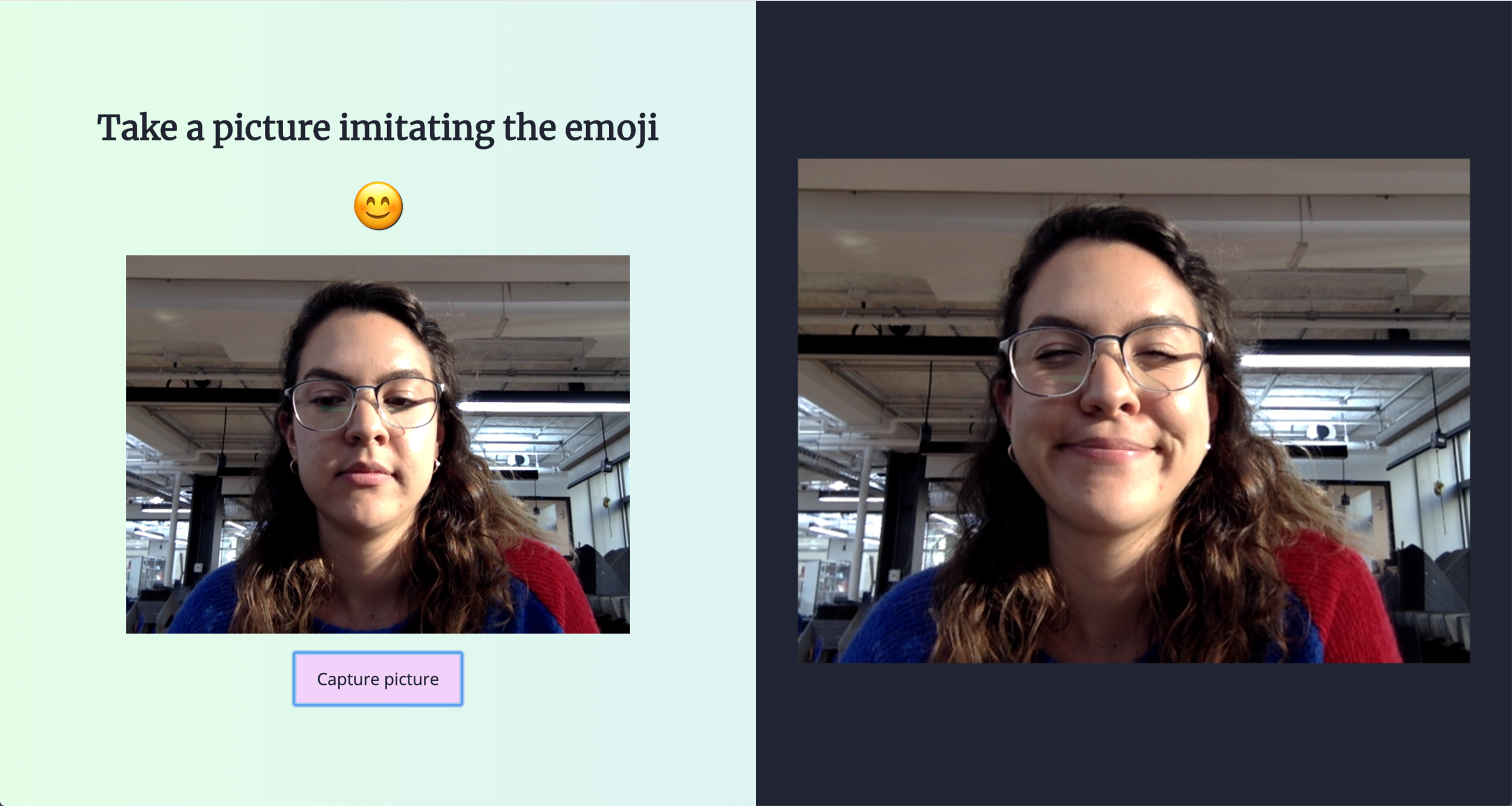

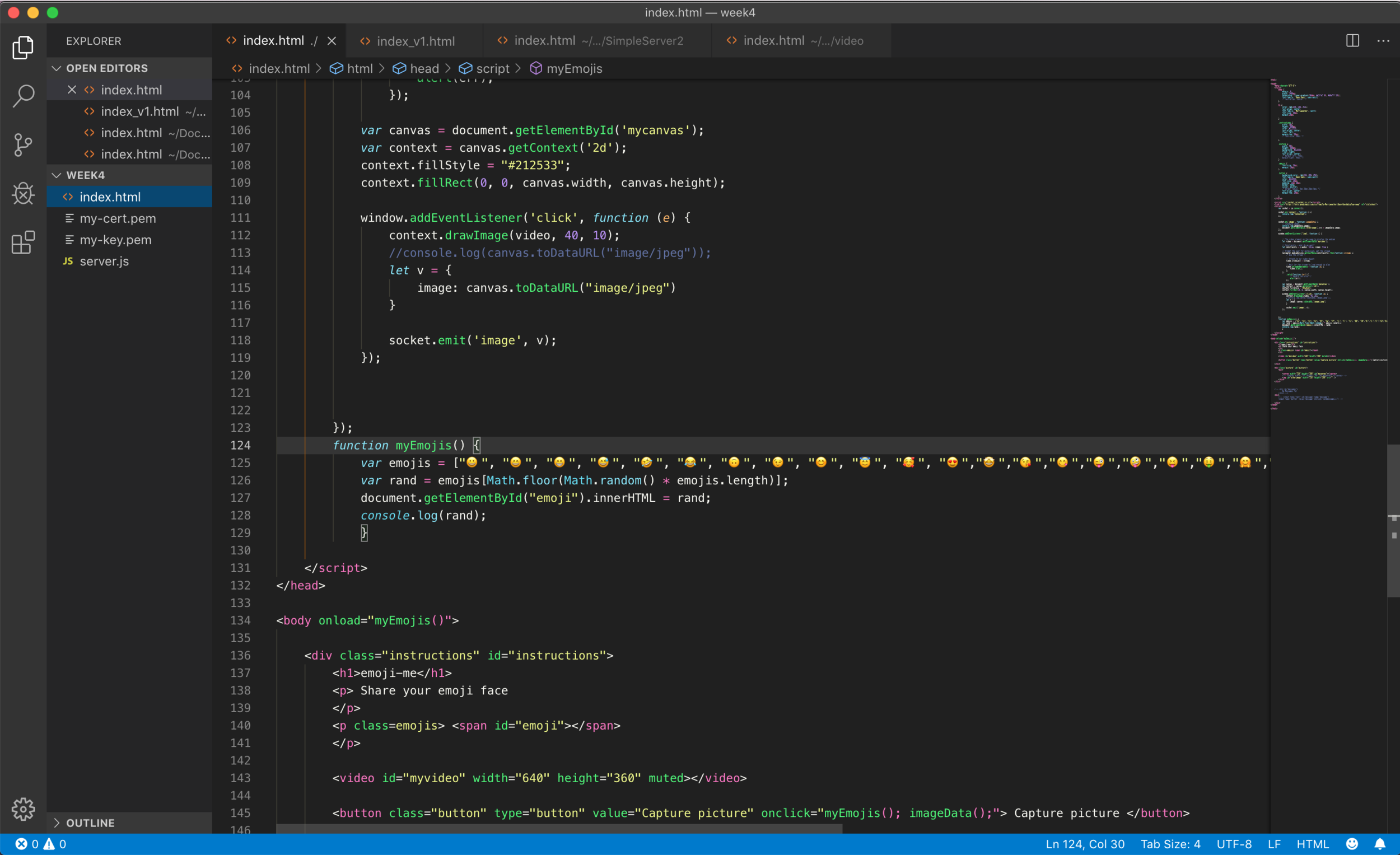

1. PT live video interface to guide and encourage patients to do their exercises

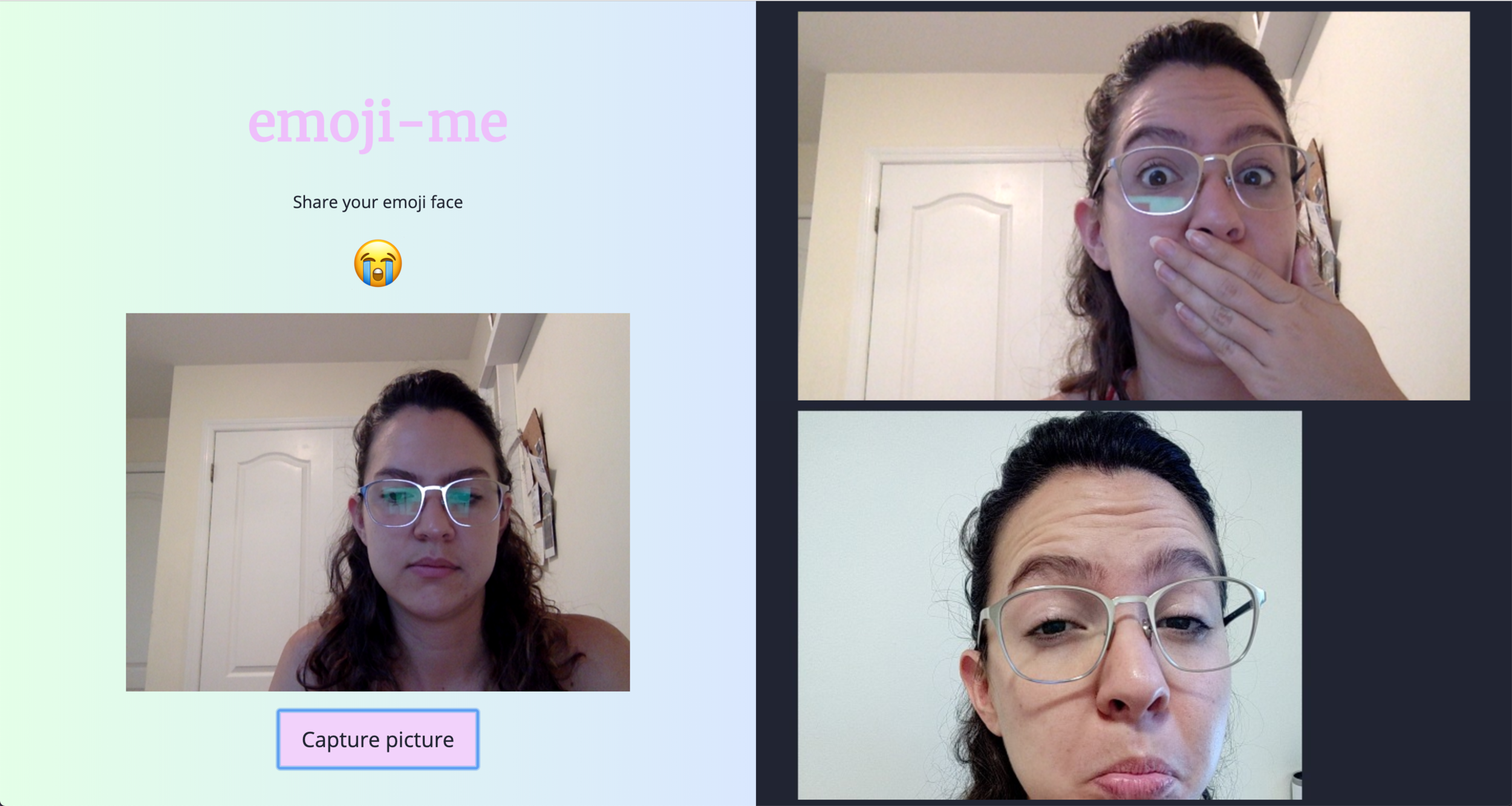

For my midterm assignment, I started working on a platform that enables physical therapists (PTs) to guide their patients while they perform certain exercises. The main pain point I would like to address is that patients lack the motivation to do their exercises, so their condition gets worse over time.

I would like to create a platform where the PT can track the patient’s progress and motivate them by recording videos of the first visits and then short videos of later appointments, so that they can compare the progress over time.

This would be a continuation of my midterm assignment, but this time I would like to:

- Collect data about the exercises performed.
- Record short videos of the patient’s progress.
- Provide a more intuitive and user-friendly interface.
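To make “collect data” and “compare progress over time” more concrete, here is a minimal TypeScript sketch of how session data might be stored and compared. Everything in it is an assumption for illustration: the `ExerciseSession` fields, the `adherence` measure (completed repetitions divided by prescribed repetitions), and the `progressSummary` helper are hypothetical, not part of the midterm project.

```typescript
// Hypothetical shape of one logged exercise session (field names are illustrative only).
interface ExerciseSession {
  date: string;           // ISO date, e.g. "2024-03-01", so string order matches date order
  exercise: string;       // exercise name as entered by the PT
  repsPrescribed: number; // repetitions the PT assigned
  repsCompleted: number;  // repetitions the patient actually performed
  videoUrl?: string;      // optional link to a short recorded clip of the session
}

// Fraction of the prescribed repetitions that were completed, capped at 1.
function adherence(session: ExerciseSession): number {
  if (session.repsPrescribed <= 0) return 0;
  return Math.min(1, session.repsCompleted / session.repsPrescribed);
}

// Compares the earliest and latest sessions of one exercise; null if none were logged.
function progressSummary(sessions: ExerciseSession[], exercise: string) {
  const history = sessions
    .filter((s) => s.exercise === exercise)
    .sort((a, b) => (a.date < b.date ? -1 : a.date > b.date ? 1 : 0));
  if (history.length === 0) return null;

  const first = history[0];
  const latest = history[history.length - 1];
  return {
    sessions: history.length,
    firstAdherence: adherence(first),
    latestAdherence: adherence(latest),
    change: adherence(latest) - adherence(first),
    firstVideo: first.videoUrl,   // clips the PT could show side by side
    latestVideo: latest.videoUrl,
  };
}

// Usage with made-up sample data (hypothetical file names).
const log: ExerciseSession[] = [
  { date: "2024-03-08", exercise: "shoulder flexion", repsPrescribed: 10, repsCompleted: 8, videoUrl: "week2.webm" },
  { date: "2024-03-01", exercise: "shoulder flexion", repsPrescribed: 10, repsCompleted: 4, videoUrl: "week1.webm" },
];
console.log(progressSummary(log, "shoulder flexion"));
```

Pairing each record with an optional video link keeps the numbers and the recorded clips together, so the earliest and latest clips can be shown next to the change in completed repetitions.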

2. Stress ball with breathing exercises app

For my “Developing Assistive Technologies” class, I am designing an object to be placed in the NYU Oral Health Center for People with Disabilities, for patients who may experience anxiety or stress and/or sensory processing issues (e.g., autism, ADHD).

Purpose of the Device: A calming tactile interface for patients waiting for their oral procedures, and for their caregivers. The device will be portable, so it can be used inside the multisensory room as well as in the waiting area, where it can invite both patients and caregivers to use the multisensory room. It will be designed to rest on the user’s lap, so that it is accessible to everyone, including people in wheelchairs.

Live Web Portion: User testing of the first prototype showed that the device might benefit from some guidance to help the user calm down. I would like to connect the stress ball to a visual interface that lets patients (or any user with stress or anxiety) follow breathing patterns.

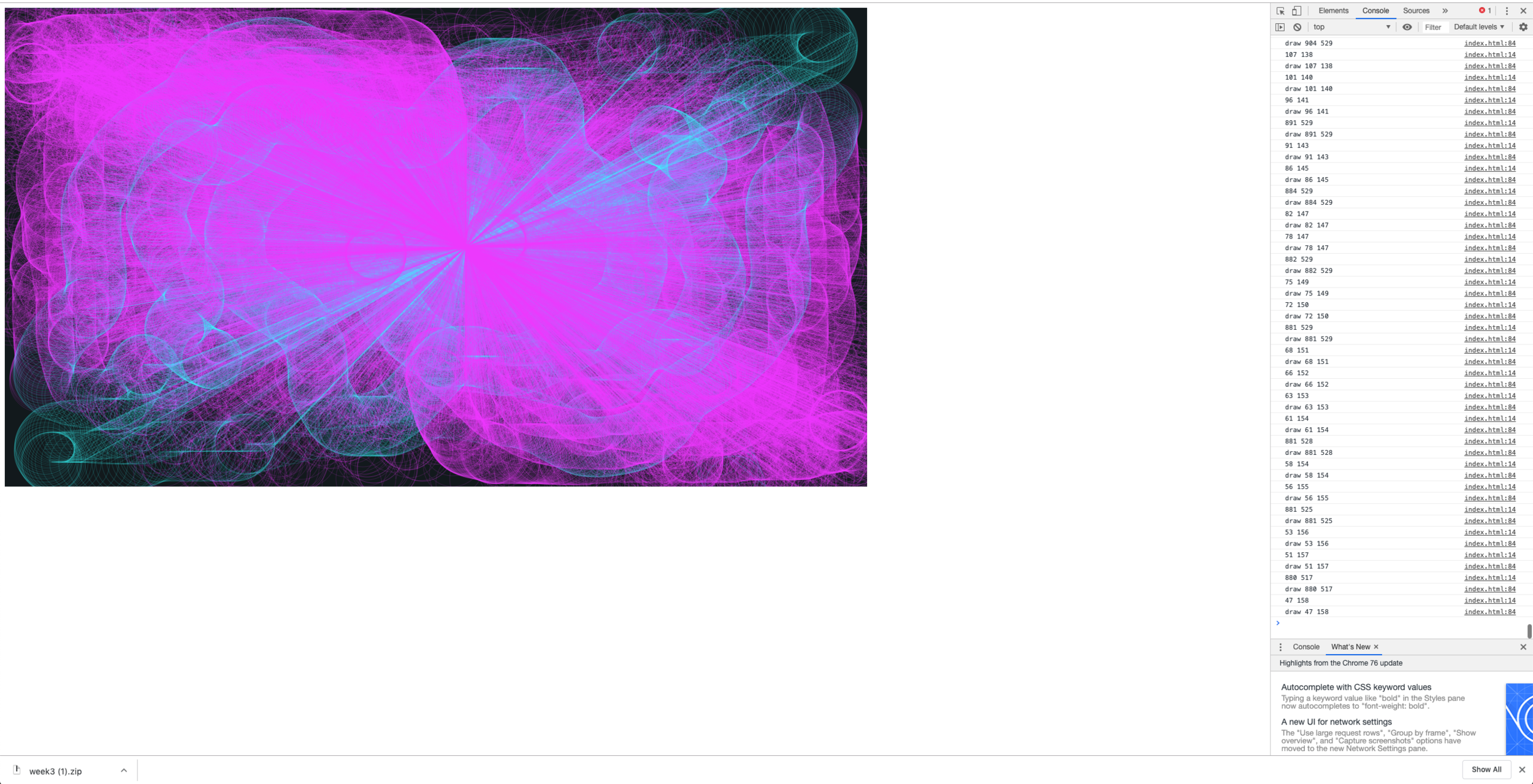

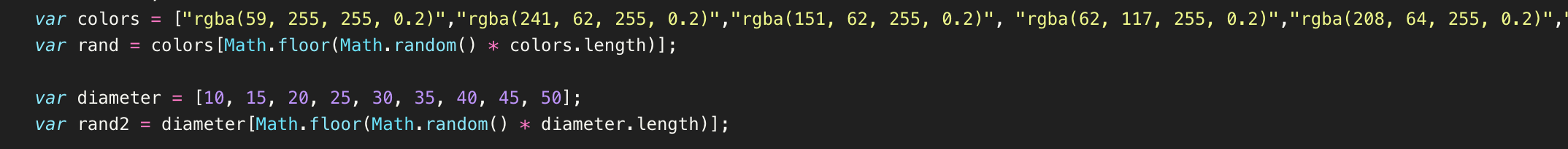

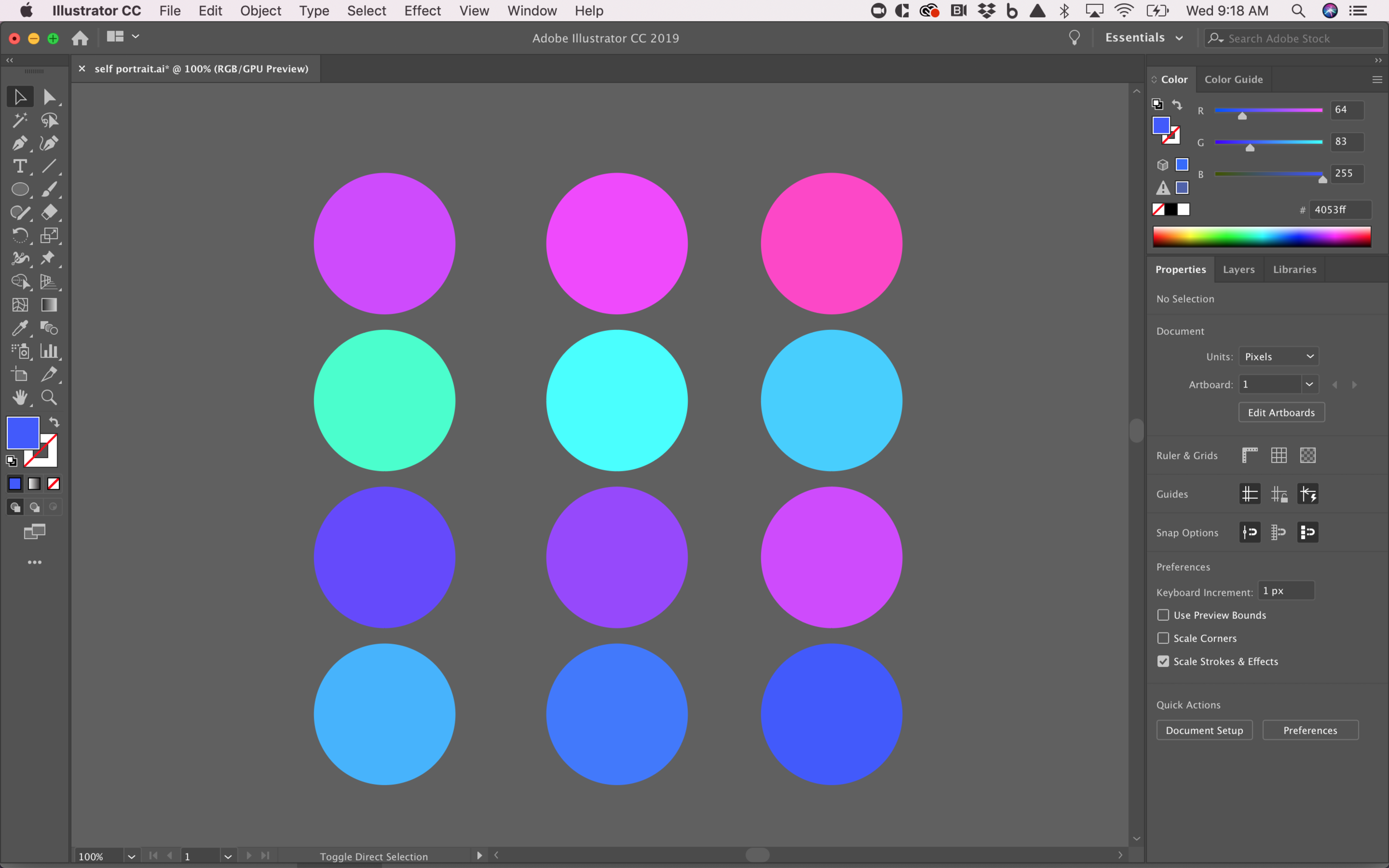

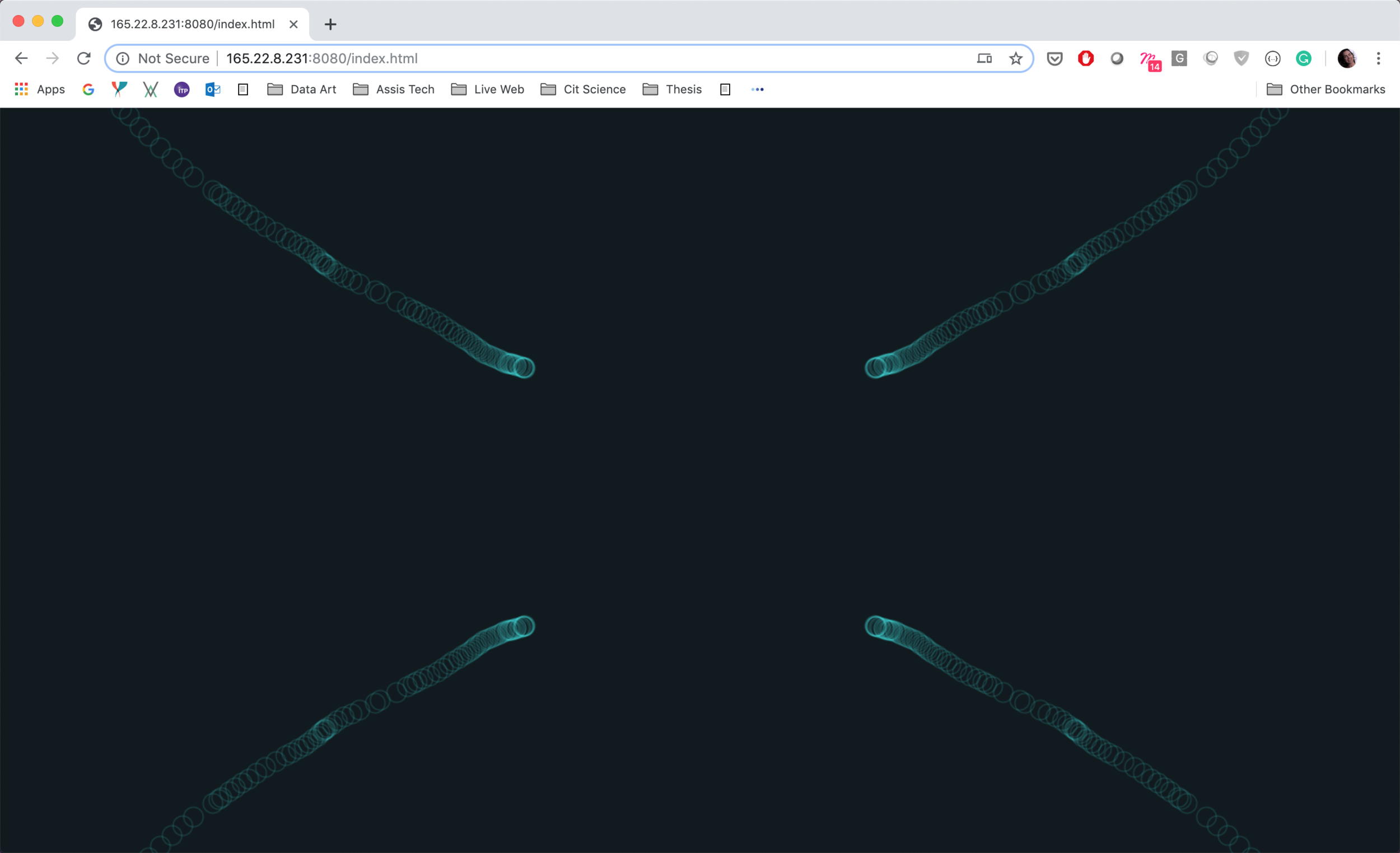

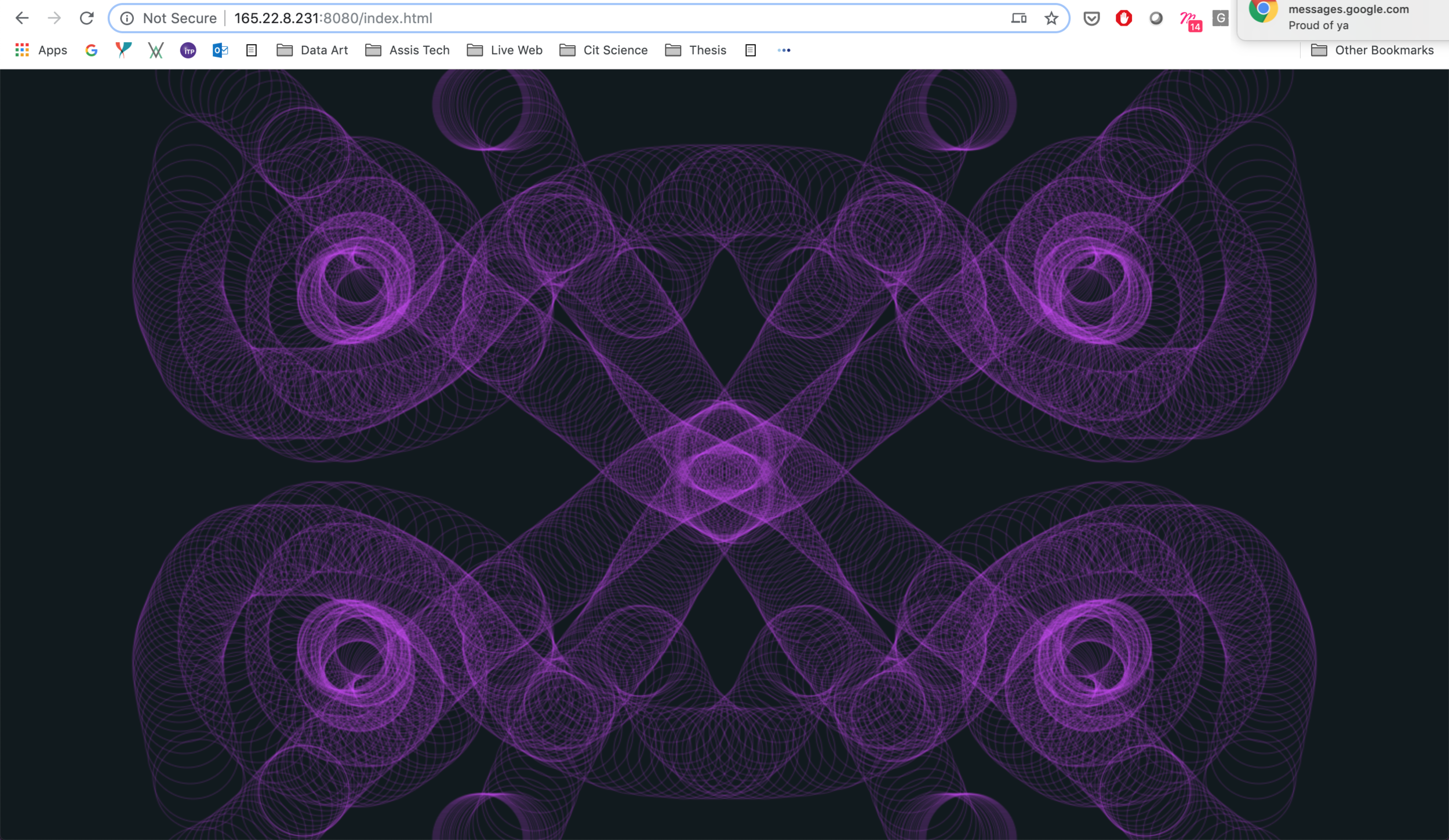

The website will display the breathing pattern as a relaxing circle on the screen: when the circle gets bigger, it is time for the user to inhale; when it gets smaller, it is time to exhale. The pattern will be calming and the breathing rate slow, so that users can relax. While the breathing visuals play, the user will be prompted to squeeze the ball, which is covered in resistive fabric connected to an Arduino that reads the squeeze as a sensor value. I will define a threshold so that when the ball is squeezed during an inhale, the visual interface shows an indicator that the user completed the breath correctly.
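A minimal TypeScript sketch of the timing and threshold logic described above, assuming a 4-second inhale, a 6-second exhale, and a squeeze threshold of 600 on the Arduino’s `analogRead` range of 0 to 1023 (10-bit boards such as the Uno). All three numbers, and the names `breathAt` and `squeezedDuringInhale`, are hypothetical placeholders to be tuned during user testing. The sketch also assumes that squeezing the fabric raises the reading; if the voltage divider is wired the other way, the comparison should be flipped.

```typescript
// Hypothetical timing: a slow 4 s inhale followed by a 6 s exhale (10 s per breath).
const INHALE_MS = 4000;
const EXHALE_MS = 6000;
const CYCLE_MS = INHALE_MS + EXHALE_MS;

// Smallest circle size, as a fraction of the maximum radius (hypothetical).
const MIN_SCALE = 0.4;

// Hypothetical squeeze threshold on the 0..1023 analogRead scale; calibrate per ball.
const SQUEEZE_THRESHOLD = 600;

type Phase = "inhale" | "exhale";

interface BreathState {
  phase: Phase;
  scale: number; // circle size as a fraction of its maximum, MIN_SCALE..1
}

// Cosine ease-in-out: 0 -> 1 with a gentle start and stop.
function ease(p: number): number {
  return (1 - Math.cos(Math.PI * p)) / 2;
}

// Maps elapsed time since the exercise started to the current phase and circle size.
function breathAt(elapsedMs: number): BreathState {
  const t = ((elapsedMs % CYCLE_MS) + CYCLE_MS) % CYCLE_MS; // position within one breath
  if (t < INHALE_MS) {
    const p = t / INHALE_MS; // progress through the inhale, 0 -> 1
    return { phase: "inhale", scale: MIN_SCALE + (1 - MIN_SCALE) * ease(p) };
  }
  const p = (t - INHALE_MS) / EXHALE_MS; // progress through the exhale, 0 -> 1
  return { phase: "exhale", scale: 1 - (1 - MIN_SCALE) * ease(p) };
}

interface SensorSample {
  elapsedMs: number; // when the reading arrived, on the same clock as breathAt
  value: number;     // raw or smoothed sensor reading
}

// True if any sample shows the ball squeezed past the threshold while the circle was growing.
function squeezedDuringInhale(samples: SensorSample[]): boolean {
  return samples.some(
    (s) => breathAt(s.elapsedMs).phase === "inhale" && s.value >= SQUEEZE_THRESHOLD,
  );
}
```

In the browser, each animation frame (for example via `requestAnimationFrame`) could call `breathAt` with the elapsed time and draw the circle at `scale` times its maximum radius. The cosine easing is just one option; it makes the circle start and stop gently, which suits a calming pace.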

The following video shows how the first prototype works:

As inspiration, I would like to draw on apps like Calm that guide the user to breathe in certain patterns. Unlike those apps, however, this project connects a tactile interface to the UI, so that the user follows the breathing exercises by squeezing the stress ball, which helps them calm down.

The following is a visual explanation of how the stress ball will be connected to the visual patterns on the screen:

Stress ball connected to the computer

Visual interaction
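How the readings travel from the Arduino to the page is not specified yet. One possible setup, assumed here, is that the Arduino prints one reading per line over USB serial (e.g. `Serial.println(analogRead(A0));`) and the browser receives that text in arbitrary chunks. The TypeScript sketch below, with hypothetical class names `SerialLineParser` and `MovingAverage`, reassembles the chunks into whole readings and smooths them before they are compared to the squeeze threshold.

```typescript
// Assumes (hypothetically) the Arduino sends one integer reading per line.
// Serial data can arrive split mid-line, so partial lines are buffered.
class SerialLineParser {
  private buffer = "";

  // Feeds a raw text chunk; returns every complete reading it finished.
  push(chunk: string): number[] {
    this.buffer += chunk;
    const lines = this.buffer.split(/\r?\n/);
    this.buffer = lines.pop() ?? ""; // keep the trailing partial line for the next chunk
    const readings: number[] = [];
    for (const line of lines) {
      const value = Number.parseInt(line.trim(), 10);
      if (!Number.isNaN(value)) readings.push(value); // skip empty or garbled lines
    }
    return readings;
  }
}

// Moving average over the last `size` readings to calm noisy fabric values.
class MovingAverage {
  private readonly size: number;
  private window: number[] = [];

  constructor(size: number) {
    this.size = size;
  }

  // Adds a reading and returns the current average.
  add(value: number): number {
    this.window.push(value);
    if (this.window.length > this.size) this.window.shift();
    const sum = this.window.reduce((a, b) => a + b, 0);
    return sum / this.window.length;
  }
}

// Usage: two chunks that split the reading "610" across a boundary.
const parser = new SerialLineParser();
const smoother = new MovingAverage(5); // hypothetical window size
for (const chunk of ["512\r\n600\r\n61", "0\n"]) {
  for (const reading of parser.push(chunk)) {
    console.log(reading, smoother.add(reading));
  }
}
```

In Chrome, the chunks could come from the Web Serial API: `navigator.serial.requestPort()`, then `port.open({ baudRate: 9600 })`, then reading `port.readable` piped through a `TextDecoderStream`. The baud rate must match the Arduino’s `Serial.begin` call; 9600 is only an example.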