Breathe With Me

Breathe With Me is a calming tactile interface for patients waiting for oral procedures and for their caregivers. The project began with the goal of developing an assistive device for the multi-sensory room at the NYU Oral Health Center for People with Disabilities. It was later expanded into a calming device for people experiencing stress and anxiety.

The problem

Primary Population For Whom the Device is Being Developed: Adult patients of the NYU Oral Health Center for People with Disabilities who might have the following:

Anxiety/stress

Sensory processing issues (autism, ADHD, etc.)

Secondary Population: Caregivers of the patients and staff of the dentist’s office

Breathe With Me aims to give users a tactile interface that helps them release anxiety and stress.

Coming up with a solution

Physical Design:

The first phase of development was to define the shape and functionality I wanted to achieve. Because this phase was a collaboration, we decided to focus on the sense of touch by developing a stress ball that sends values to an Arduino. On the physical computing side I had several options. The original idea was to use an air pressure sensor, but I came across a stretch conductive fabric in my “Textile Interfaces” class at ITP and decided to use it instead. The fabric sends values to the Arduino, which I later used to dim the light of an LED ring.

User Interaction:

Once the physical computing phase was well advanced, I had the opportunity to develop a more finished concept for the stress ball by connecting it to a screen. The screen displays a breathing pattern (the hexagon), while the size of a circle on the screen is dictated by how much pressure the user applies to the stress ball. The main goal of the visual interface is to guide the user to breathe slowly while trying to match the size of the hexagon by squeezing the ball.
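
The interaction described above can be sketched as a small timing model. This is only an illustration, not the project's actual code: the 4-second inhale and 6-second exhale, the function names, and the 0-to-1 "level" scale are all assumptions made for the example.

```typescript
// Hypothetical timings; the original write-up does not state them.
const INHALE_MS = 4000;
const EXHALE_MS = 6000;

type Phase = "inhale" | "exhale";

interface GuideState {
  phase: Phase;
  // 0 = fully exhaled (smallest hexagon), 1 = fully inhaled (largest hexagon)
  level: number;
}

// Where the breathing guide is at a given time since the session started.
function guideAt(elapsedMs: number): GuideState {
  const cycle = INHALE_MS + EXHALE_MS;
  // Wrap the elapsed time into a single inhale/exhale cycle.
  const t = ((elapsedMs % cycle) + cycle) % cycle;
  if (t < INHALE_MS) {
    return { phase: "inhale", level: t / INHALE_MS };
  }
  return { phase: "exhale", level: 1 - (t - INHALE_MS) / EXHALE_MS };
}

// Convert a 0..1 level into a radius in pixels. The same helper can size
// both the guide hexagon and the pressure-driven circle.
function radiusFor(level: number, minR: number, maxR: number): number {
  const clamped = Math.min(1, Math.max(0, level));
  return minR + clamped * (maxR - minR);
}

// How far the user's squeeze (0..1) is from the guide (0..1).
function matchError(guideLevel: number, pressureLevel: number): number {
  return Math.abs(guideLevel - pressureLevel);
}
```

In a p5.js draw loop, one might call `guideAt(millis())` each frame, draw the hexagon with `radiusFor(guide.level, ...)`, and draw the circle with the same helper fed by the normalized pressure reading.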

Development phase:

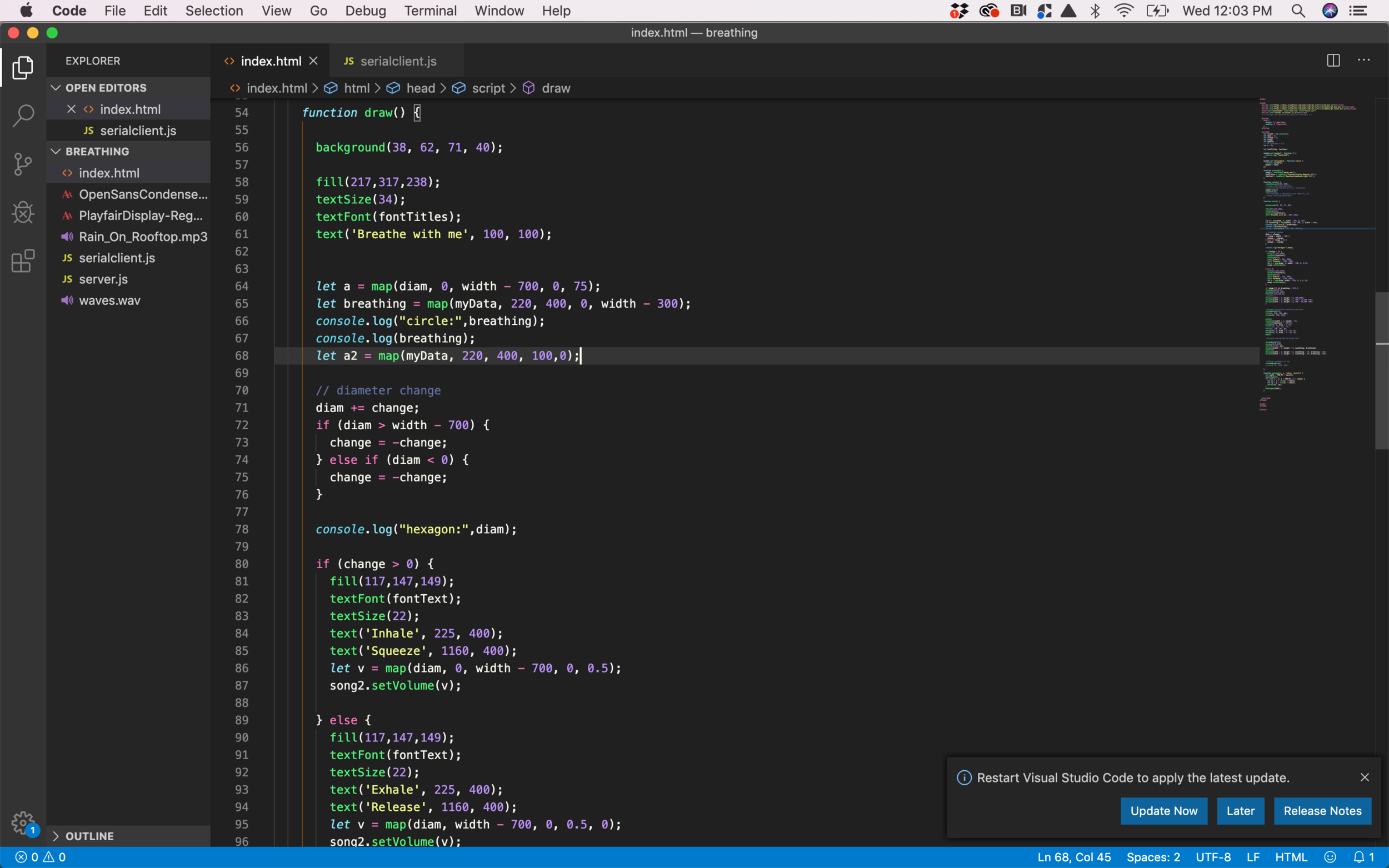

I started by coding the Arduino, which receives the values from the stretch fabric and maps them to the brightness of the LED ring. Once that functionality was finalized, I implemented the visual interface for the screen with the breathing pattern. To send the values from the Arduino to the visualization, I used web sockets with a serial client script that receives the values and uses them to alter the visualization in p5.js.
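
As a rough sketch of the mapping step on the receiving side, the snippet below parses one reading and rescales it. It assumes the Arduino prints one integer per line and that readings span 0 to 1023 (the range of a 10-bit analog input); the real fabric sensor likely covers a narrower range that would need calibration. All names here are hypothetical.

```typescript
// Assumed sensor range: a 10-bit analog reading. Calibrate for the real fabric.
const SENSOR_MIN = 0;
const SENSOR_MAX = 1023;

// Linearly rescale a value from one range to another, clamping at the ends.
function mapRange(
  value: number,
  inMin: number,
  inMax: number,
  outMin: number,
  outMax: number
): number {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(1, Math.max(0, t));
  return outMin + clamped * (outMax - outMin);
}

// Parse one text line relayed from the serial port (assumed format: "512\r\n").
// Returns null when the line does not start with a number.
function parseReading(line: string): number | null {
  const n = parseInt(line.trim(), 10);
  return isNaN(n) ? null : n;
}

// Brightness on a 0..255 scale, as a typical LED driver would expect.
function brightnessFor(reading: number): number {
  return Math.round(mapRange(reading, SENSOR_MIN, SENSOR_MAX, 0, 255));
}

// Normalized pressure (0..1) for sizing the on-screen circle.
function pressureLevel(reading: number): number {
  return mapRange(reading, SENSOR_MIN, SENSOR_MAX, 0, 1);
}
```

Clamping keeps a noisy or out-of-range reading from producing a negative brightness or an oversized circle.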

I also added a relaxing sound file (ocean waves). The volume rises when the breathing pattern indicates “Inhale” and falls when it indicates “Exhale”, so the sound works as a secondary guide for users and also improves accessibility.
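
One way to express this volume behavior is to pick a target level per breathing phase and move toward it in small steps, so the change is gradual rather than abrupt. The sketch below is an assumption about how this could be done; the volume levels, step size, and function names are hypothetical and not taken from the project.

```typescript
type BreathPhase = "inhale" | "exhale";

// Hypothetical levels on a 0..1 volume scale.
const QUIET_VOLUME = 0.2;
const LOUD_VOLUME = 0.9;

// Louder while inhaling, quieter while exhaling.
function targetVolume(phase: BreathPhase): number {
  return phase === "inhale" ? LOUD_VOLUME : QUIET_VOLUME;
}

// Move the current volume toward the target by at most maxStep per call.
function stepVolume(current: number, target: number, maxStep: number): number {
  const delta = target - current;
  if (Math.abs(delta) <= maxStep) {
    return target;
  }
  return delta > 0 ? current + maxStep : current - maxStep;
}
```

Called once per frame, `stepVolume` yields a value that could then be passed to the audio library's volume setter.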

User Testing:

A crucial part of this project was the chance to test the prototypes at different stages of development, so that we could gather feedback from users and implement it in the next iteration. Two of the most important pieces of feedback were that users enjoyed squeezing the ball and that they would benefit from some kind of visual guidance for a calming breathing pattern they could follow. We also tested different sizes of the stress ball and different cover materials to make sure the design would meet users’ needs.

My Role:

The research process and the user testing were a collaboration for the class Developing Assistive Technologies. I took more ownership of the project when I continued it individually for another class (Live Web). My role covered the physical design of the sphere, the fabrication, the physical computing, the coding, and the digital design of the visual interface, as well as the sound implementation.

The Result:

The user squeezes the stress ball while following the breathing pattern shown on the screen; the goal is to match that pattern by inhaling and squeezing at the same time. The project prioritizes the sense of touch because the room lacks tactile applications. The design therefore aims to provide a calming and relaxing experience through touch, with visuals and sound as secondary outputs.

The device is portable, so it can be used inside the multi-sensory room and also in the waiting area, as a way of inviting both patients and caregivers to use the multi-sensory room. It can be placed on the user’s lap, which makes it accessible to everyone, including people in wheelchairs.

This project started as a collaboration with Nicole Nimeth (IDM) and Gabrielle Caguete (OT). It was then expanded to work as a peer collaborative breathing tool for my Live Web class in the third semester of ITP at NYU.

Mentors:

Shawn Van Every

Dr. Ron Kosinski

Amy Hurst